Integrating LiDAR into self-driving vehicles is undoubtedly the most discussed topic in intelligent driving in 2021. The industry broadly agrees that the strong perception capability of 3D LiDAR will make autonomous driving safer and more comfortable.

The open questions, however, are these: how much can an added LiDAR improve the perception capability of the whole intelligent perception system? Will it meet the industry's expectations? And how well does a multi-sensor fusion system of 3D LiDAR, camera, and millimeter-wave radar actually perform overall?

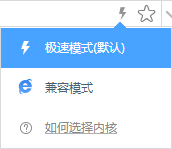

△The real-time point cloud of automotive-grade solid-state LiDAR M1, which will start mass production and delivery to OEMs in Q2

The best way to answer these questions is to evaluate the system's performance.

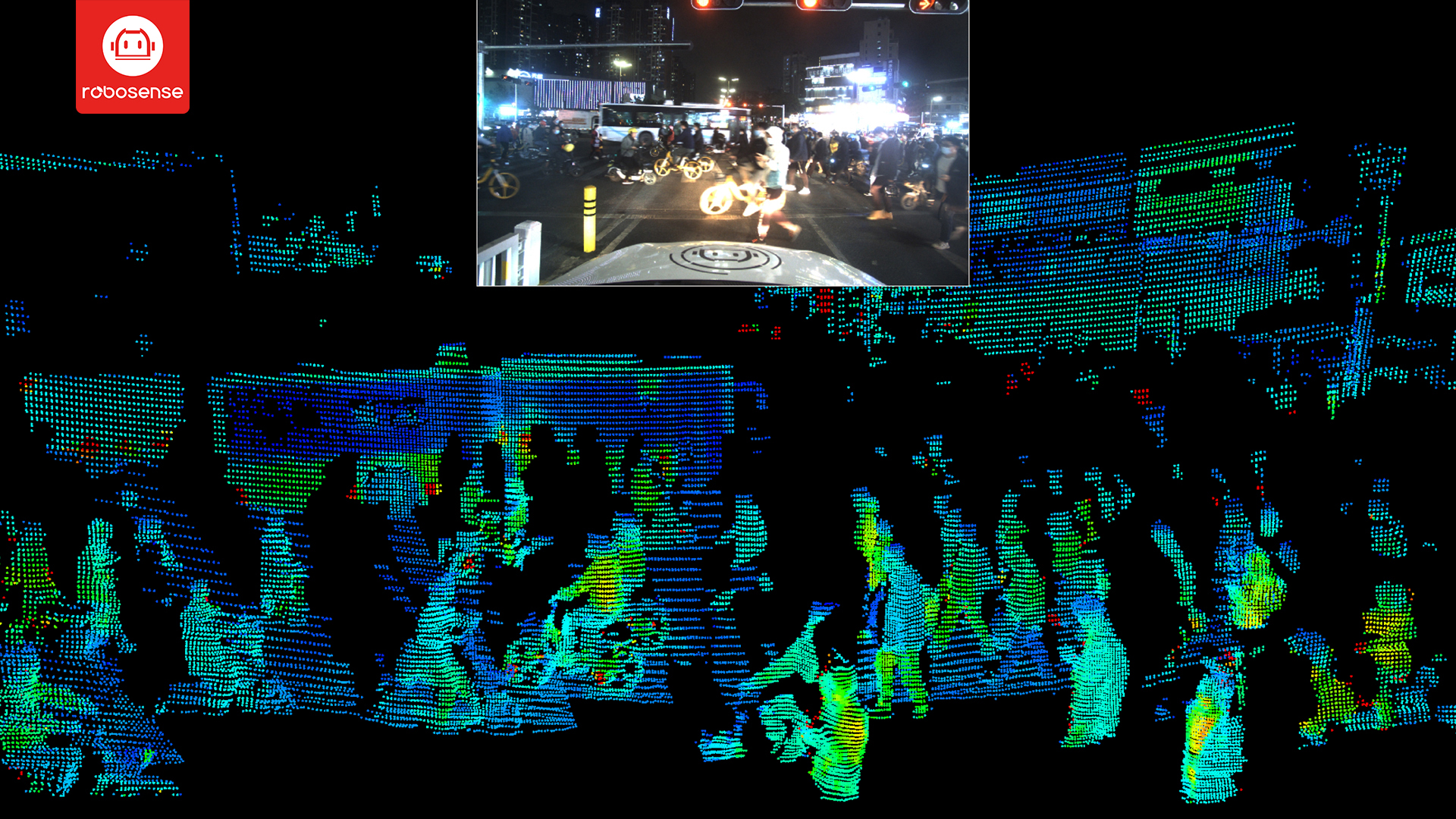

△In the development model of automotive projects, test and verification carry the same weight as R&D and design

In the strict development system of the automotive industry, testing and verifying performance is as important as R&D and design. Objectively testing and evaluating the performance of the perception sensor system against the project requirements and goals clearly points out the development direction at each key phase and keeps the project in a state of "Know How To Do".

LiDAR's reliability and AI perception detection performance are higher than those of cameras and millimeter-wave radars. The standards and tools used to evaluate cameras and millimeter-wave radars therefore do not meet the requirements of evaluating LiDAR.

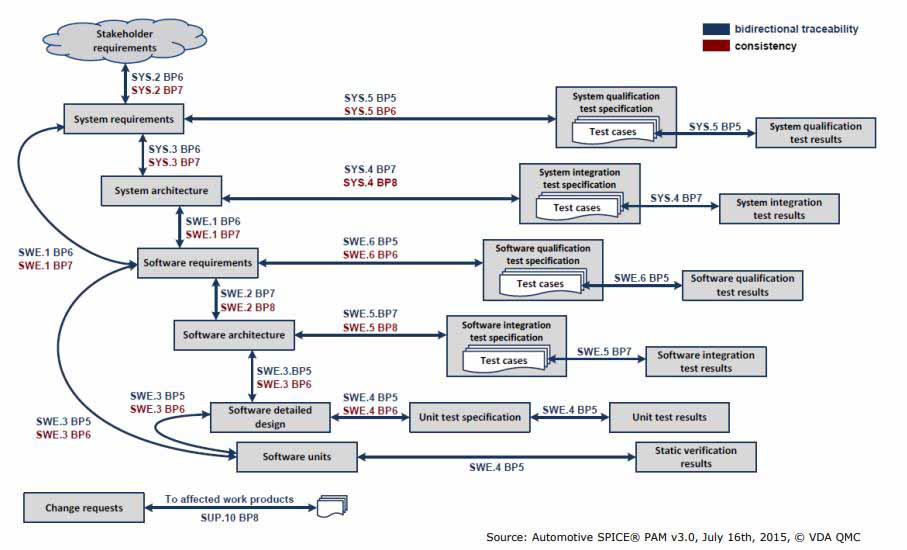

△ The real-time perception performance based on automotive-grade solid-state LiDAR M1, whose detection performance is better than the camera and millimeter-wave radar

Therefore, a set of high-level evaluation standards and tools is required for the evaluation of LiDAR and sensor fusion systems.

If the evaluation is compared to an exam, the ground truth data is the "reference answer" against which the results of the perception system are graded.

The accuracy of the ground truth data must therefore be significantly higher than that of the results given by the device under test (DuT) in all aspects, including detection performance and geometric precision.

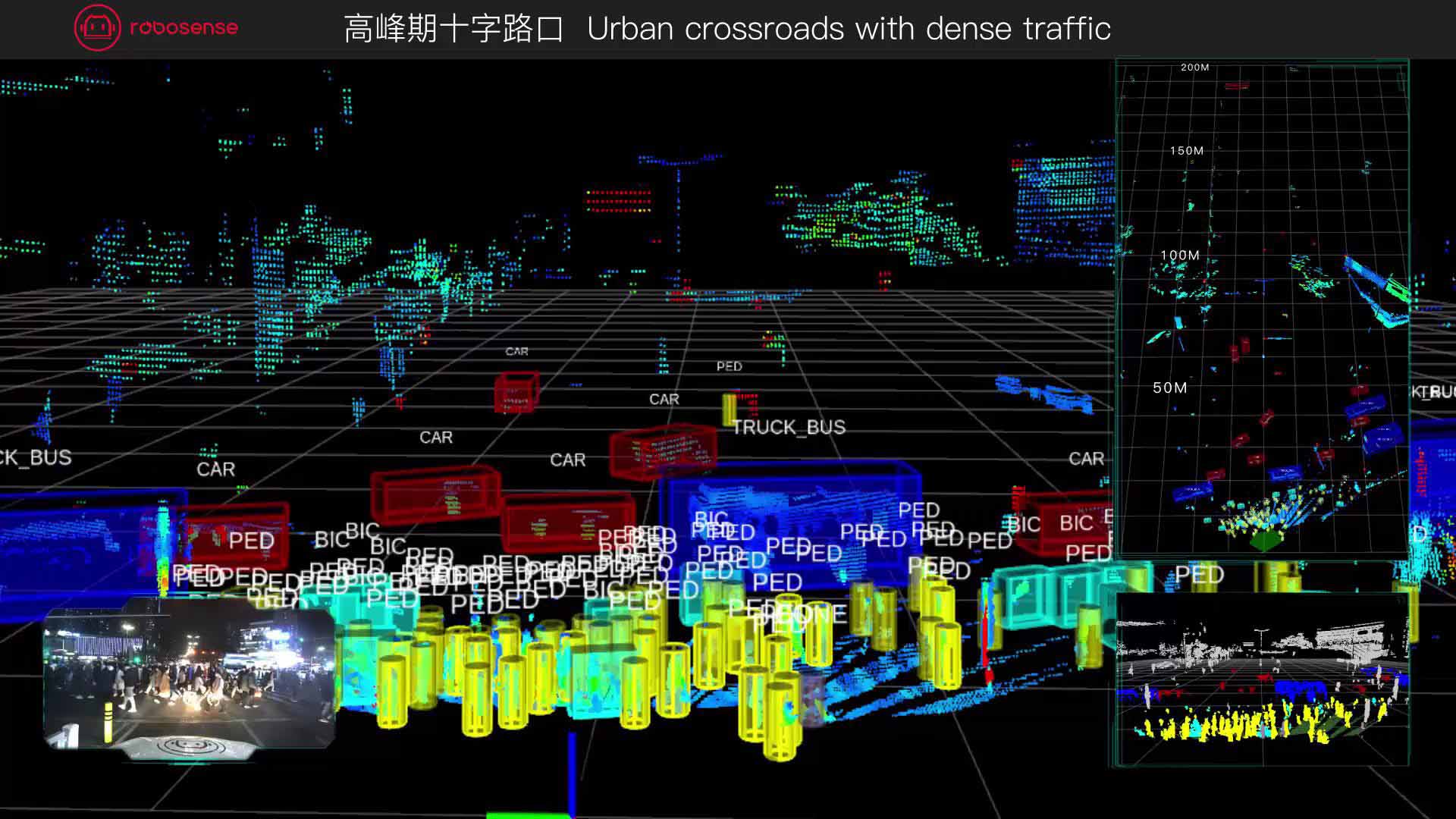

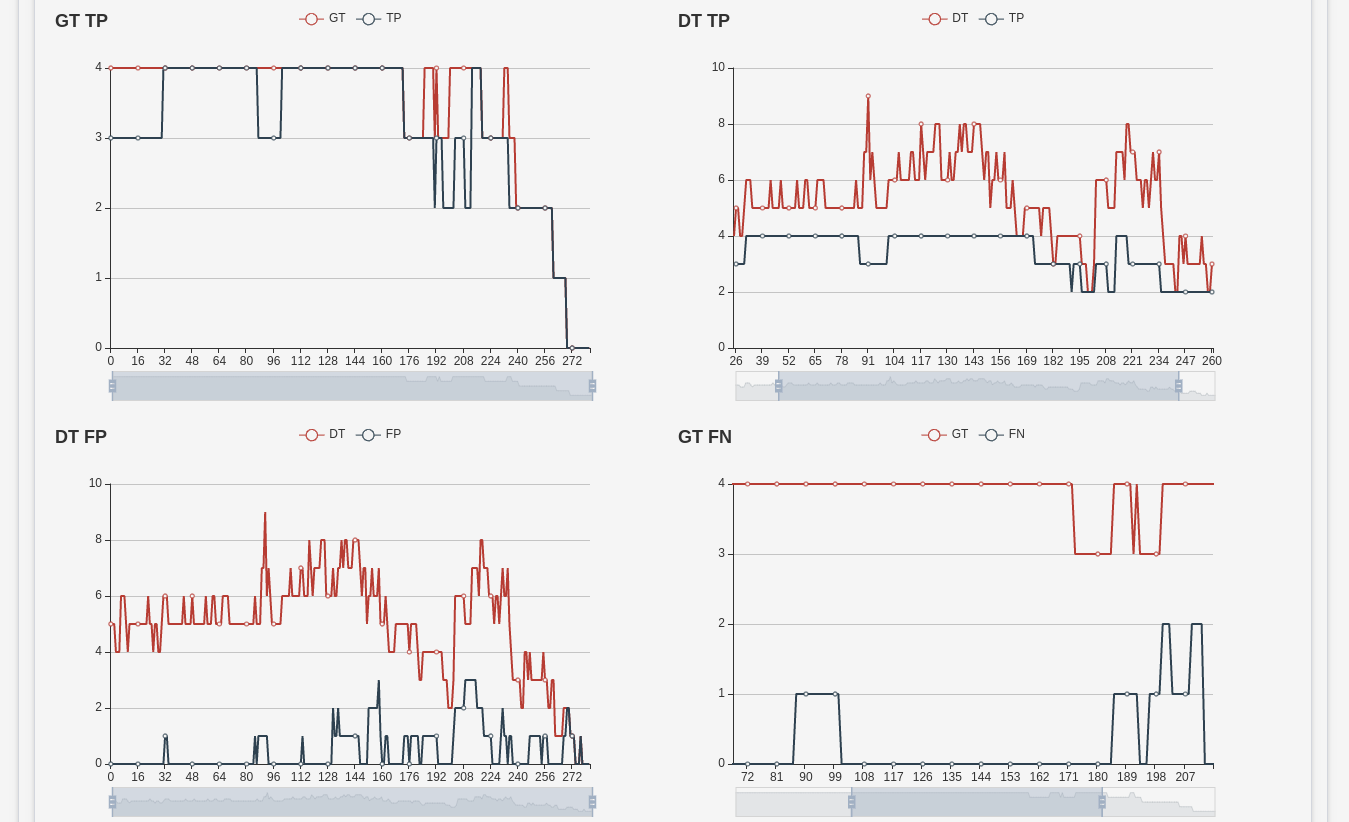

△The picture shows the analysis of the detection performance of the perception system under test, based on ground truth data. The abbreviations in the figure are as follows: GT (ground truth), TP (the true targets detected by the system under test), DT (all targets detected by the system under test), FP (the false targets detected by the system under test), FN (the true targets missed by the system under test)
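The counts in the figure can be turned into the usual precision and recall figures once detections have been paired with ground truth targets. The following is a minimal sketch, not the RS-Reference implementation: the `Target` type, the greedy nearest-distance matching, and the 2 m gating distance `max_dist` are all assumptions made for illustration.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Target:
    x: float  # longitudinal position in metres
    y: float  # lateral position in metres


def match_targets(gt, dt, max_dist=2.0):
    """Greedily pair GT targets and detections in order of increasing distance.

    max_dist is an assumed gating distance, not a value from the article.
    Returns a list of (gt_index, dt_index) pairs; each index is used at most once.
    """
    candidates = sorted(
        (hypot(g.x - d.x, g.y - d.y), gi, di)
        for gi, g in enumerate(gt)
        for di, d in enumerate(dt)
    )
    used_gt, used_dt, pairs = set(), set(), []
    for dist, gi, di in candidates:
        if dist > max_dist:
            break  # all remaining candidates are even farther apart
        if gi in used_gt or di in used_dt:
            continue
        used_gt.add(gi)
        used_dt.add(di)
        pairs.append((gi, di))
    return pairs


def detection_counts(gt, dt, max_dist=2.0):
    """Derive TP, FP, FN, precision and recall from GT and DT target lists."""
    tp = len(match_targets(gt, dt, max_dist))
    fp = len(dt) - tp  # detections with no ground truth partner
    fn = len(gt) - tp  # ground truth targets the system missed
    precision = tp / len(dt) if dt else 0.0
    recall = tp / len(gt) if gt else 0.0
    return {"TP": tp, "FP": fp, "FN": fn, "precision": precision, "recall": recall}


gt = [Target(10.0, 0.0), Target(20.0, 3.0), Target(35.0, -2.0)]
dt = [Target(10.3, 0.1), Target(20.5, 2.8), Target(50.0, 0.0)]
result = detection_counts(gt, dt)
print(result)
```

In this example two detections fall within the gate of a ground truth target, one detection has no partner (FP), and one ground truth target is missed (FN). A production evaluator would more likely match on bounding-box overlap and object class, but the bookkeeping is the same.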

The ground truth data, usually at the petabyte (PB) scale, includes dynamic information such as obstacle type, speed, and location, and static information such as lane lines and road boundaries.

Development iterates constantly and rapidly, so the requirements for ground truth data keep changing with each testing and R&D upgrade cycle. The efficiency of ground truth data generation must therefore keep up with the development requirements.

How to obtain high-quality PB-level ground truth data?

As mentioned above, data labeling quality and data generation efficiency are the two key aspects when considering ground truth data.

The traditional manual labeling method labels targets frame by frame, which takes an extremely long time and comes at a huge cost. With a labeling rate of about 1:1000, the data collected in just one day takes about 1,000 days, or nearly three years, to label. Manual labeling is far too slow to meet frequently updated testing requirements.
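The arithmetic behind the 1:1000 figure is simple enough to check directly. The short sketch below assumes continuous calendar days and makes no assumption about how the labeling work is staffed, which the article does not specify; the function name is hypothetical.

```python
def labeling_time_days(collected_days, labeling_ratio):
    """Days of labeling effort for a given amount of collected data.

    labeling_ratio is the number of labeling days needed per day of
    collected data (1000 for the 1:1000 rate quoted above).
    """
    return collected_days * labeling_ratio


DAYS_PER_YEAR = 365  # assumption: continuous calendar days, no staffing model

manual_days = labeling_time_days(collected_days=1, labeling_ratio=1000)
manual_years = manual_days / DAYS_PER_YEAR

print(f"{manual_days} days, about {manual_years:.1f} years")
# prints "1000 days, about 2.7 years"
```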

In terms of labeling capability, manual labeling also has significant defects. Because static data is labeled frame by frame, the speed and acceleration of dynamic objects cannot be labeled. Moreover, the shape of an object cannot be completely reconstructed from a single frame of data (for example, a vehicle in front usually appears as an L-shape in a single frame of point cloud), so the accuracy of the bounding box size cannot be guaranteed.

The RS-Reference 2.1 can efficiently evaluate the performance of LiDAR and multi-sensor fusion systems.

The RS-Reference was launched to market in 2016 when the automotive-grade MEMS solid-state LiDAR RS-LiDAR-M1 project was established. The RS-Reference has been used and recognized by many global top OEMs and Tier1s.

△The iteration history of the RS-Reference. Through continuous optimization and upgrades, the system now has a rich set of evaluation function modules and a software tool chain.

The RS-Reference provides an intelligent ground truth generation and evaluation solution that can output detection performance and geometric error indicators with a labeling efficiency close to 1:1. Its ground truth is significantly more accurate than the output of real-time perception, manual labeling, and traditional labeling tools.
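The article does not list the specific geometric error indicators. As an illustration only, the sketch below computes three common ones for a matched pair of ground truth and DuT boxes: center distance, size error, and bird's-eye-view intersection over union (IoU). The `Box` type is hypothetical, and the boxes are treated as axis-aligned with heading ignored, which is a simplification.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Box:
    cx: float      # box center, longitudinal (m)
    cy: float      # box center, lateral (m)
    length: float  # extent along x (m)
    width: float   # extent along y (m)


def _overlap(c1, s1, c2, s2):
    """Length of the overlap of two 1D intervals given by center and size."""
    lo = max(c1 - s1 / 2, c2 - s2 / 2)
    hi = min(c1 + s1 / 2, c2 + s2 / 2)
    return max(0.0, hi - lo)


def bev_iou(a, b):
    """IoU of two axis-aligned bird's-eye-view boxes (heading is ignored)."""
    inter = _overlap(a.cx, a.length, b.cx, b.length) * _overlap(a.cy, a.width, b.cy, b.width)
    union = a.length * a.width + b.length * b.width - inter
    return inter / union if union > 0 else 0.0


def geometric_errors(gt, dut):
    """Per-object geometric errors of a DuT box relative to its ground truth box."""
    return {
        "center_error_m": hypot(dut.cx - gt.cx, dut.cy - gt.cy),
        "length_error_m": dut.length - gt.length,
        "width_error_m": dut.width - gt.width,
        "iou": bev_iou(gt, dut),
    }


gt_box = Box(cx=10.0, cy=0.0, length=4.0, width=2.0)
dut_box = Box(cx=12.0, cy=0.0, length=4.0, width=2.0)
errors = geometric_errors(gt_box, dut_box)
print(errors)
```

Here the DuT box has the right size but is shifted 2 m along the driving direction, so the size errors are zero while the overlap drops to one third. This is why the ground truth boxes themselves need to be more accurate than the errors being measured.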

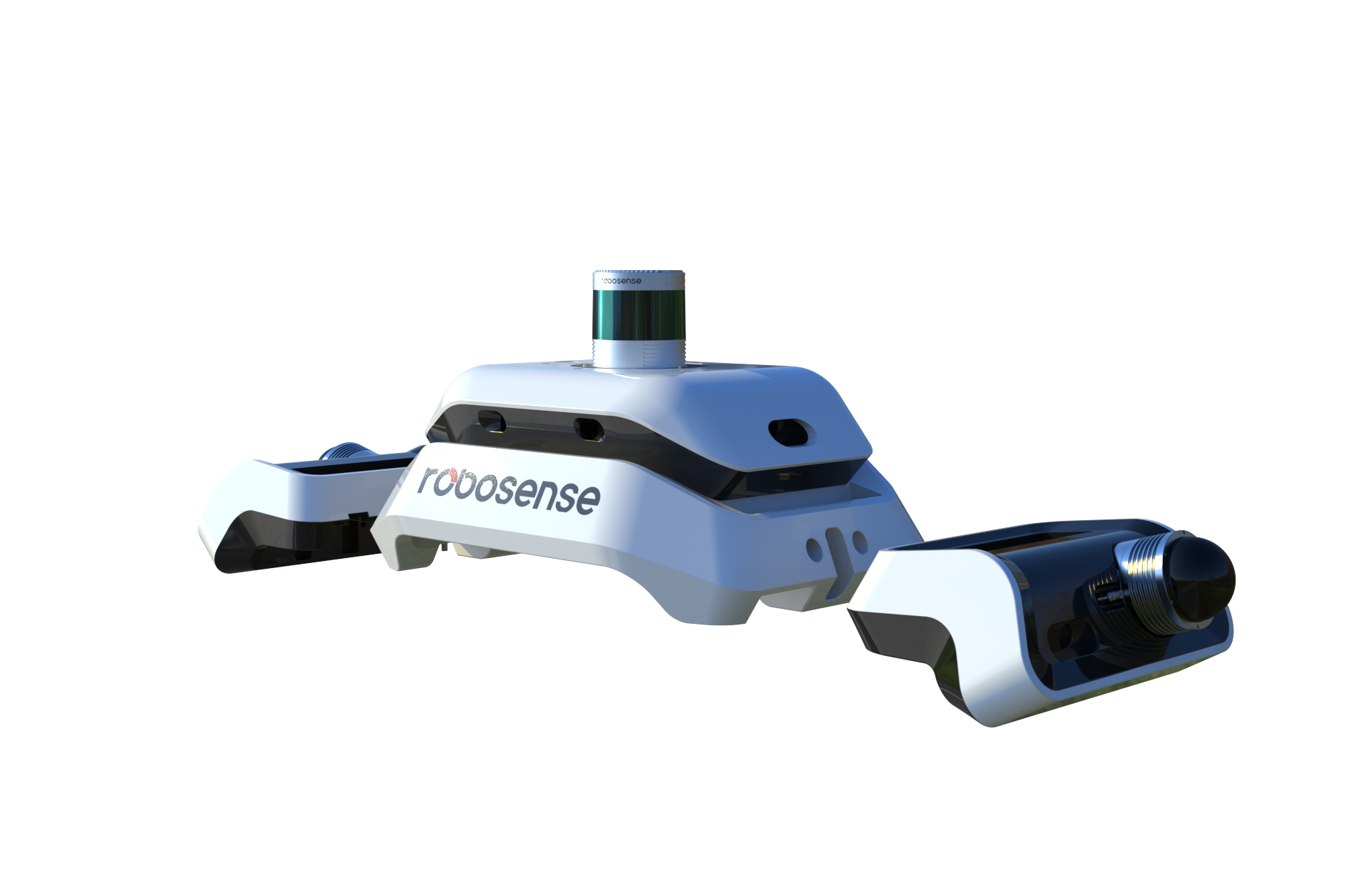

△The appearance of the latest version of RS-Reference 2.1

Ø High-performance and high-maturity data collection sensor system

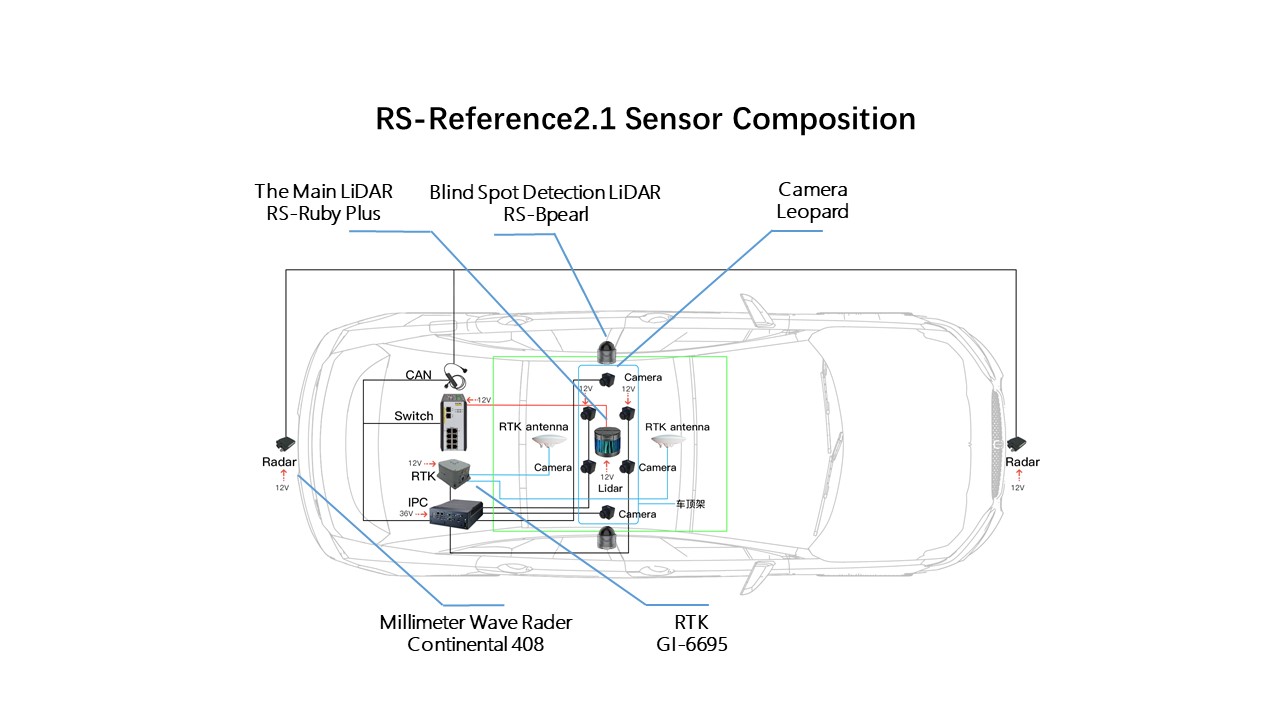

The RS-Reference system includes the RoboSense 128-beam LiDAR RS-Ruby, a Leopard camera, a Continental 408 millimeter-wave radar, and a GI-6695 RTK unit. Version 2.1 adds two RoboSense RS-Bpearl LiDARs for near-field blind-spot detection. Together, the sensors provide full coverage and perception redundancy, ensuring the reliability and accuracy of the raw environment data.

Ø Detachable roof-mounted deployment requires no vehicle body modification

The RS-Reference system can adapt to different vehicle sizes and does not occupy the sensor installation positions of the DuTs. It can therefore directly evaluate an intelligent driving system with the same sensor set as the commercial vehicle.

△The latest version of RS-Reference 2.1 adopts detachable roof-mounted deployment

Ø Highly mature perception algorithm and dedicated offline processing mechanism

Perception is the key to smart labeling outperforming manual labeling, because it is responsible for extracting the ground truth data. The RS-Reference system uses a customized, dedicated offline perception algorithm backed by over 13 years of accumulated experience in LiDAR sensing algorithm development.

The RS-Reference performs "whole process tracking and identification" for each obstacle and extracts all ground truth data from each frame. Because every obstacle is tracked across frames, the system can label speed and acceleration, and it can accurately delineate the bounding box using comprehensive shape and size information. The system is also able to accurately differentiate obstacles that are in close proximity in complex scenes.
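Tracking across frames is what makes speed and acceleration labels possible at all, since a single frame holds only positions. The sketch below shows the idea with plain finite differences over a tracked object's longitudinal position. It is an illustration under simplifying assumptions (1D motion, noise-free positions, hypothetical function names) and not the RS-Reference algorithm, which the article does not describe.

```python
def finite_difference(values, timestamps):
    """Derivative estimate between each pair of consecutive samples."""
    return [
        (v1 - v0) / (t1 - t0)
        for v0, v1, t0, t1 in zip(values, values[1:], timestamps, timestamps[1:])
    ]


def track_kinematics(positions, timestamps):
    """Estimate speed and acceleration from one object's tracked positions.

    positions: longitudinal position per frame, in metres
    timestamps: frame times in seconds, strictly increasing
    Returns (speeds, accelerations); each list is one element shorter
    than the list it was derived from.
    """
    speeds = finite_difference(positions, timestamps)
    # Each speed belongs to the interval between two frames, so it is
    # time-stamped at the midpoint of that interval.
    mid_times = [(t0 + t1) / 2 for t0, t1 in zip(timestamps, timestamps[1:])]
    accelerations = finite_difference(speeds, mid_times)
    return speeds, accelerations


# Object starting at 10 m/s and accelerating at 2 m/s^2, sampled at 10 Hz.
timestamps = [0.0, 0.1, 0.2, 0.3]
positions = [10.0 * t + 0.5 * 2.0 * t * t for t in timestamps]
speeds, accelerations = track_kinematics(positions, timestamps)
print(speeds, accelerations)
```

With one frame there are no pairs to difference, so both lists come back empty, which mirrors the limitation of frame-by-frame manual labeling. Real point cloud tracks are noisy, so an offline pipeline would typically smooth over the whole track rather than difference adjacent frames.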

△The visualized ground truth data in complex scene generated by RS-Reference 2.1

△ The video of visualized ground truth data in complex scene generated by RS-Reference 2.1

Ø Full-stack evaluation tool chain

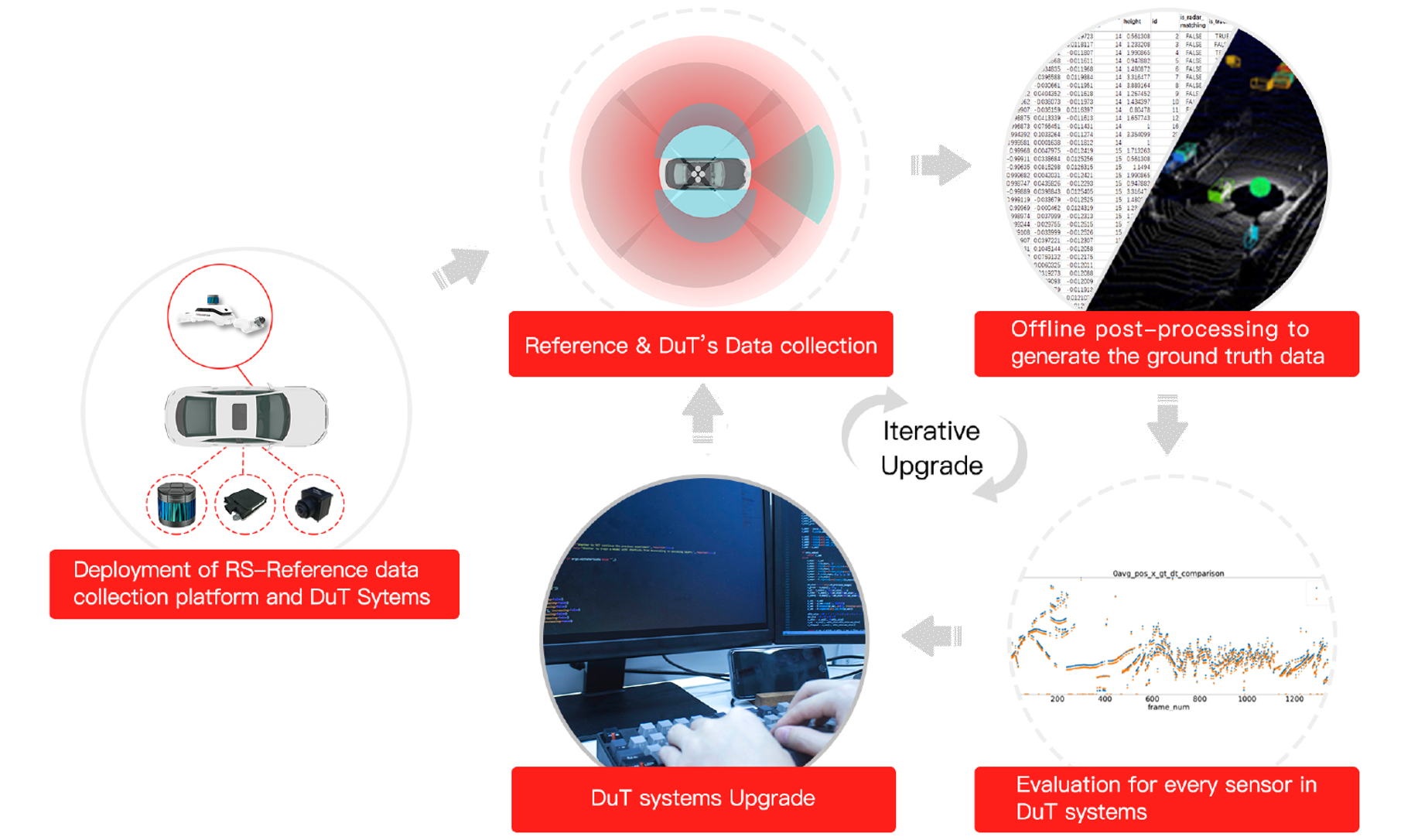

The evaluation process of sensor perception performance can be roughly divided into four major steps: data collection, offline processing to generate ground truth data, DuT evaluation and analysis, and DuT optimization and upgrade. The process is usually repeated multiple times until a satisfactory perception solution is obtained.

△ Evaluation process of perception system development

The full-stack tool chain of the RoboSense RS-Reference includes data collection tools, sensor calibration tools, visualization tools, manual correction tools, evaluation tools, etc. The 2.1 version has upgraded the data management platform and has added the scene semantic labeling function that serves every step of the evaluation process.

△RS-Reference provides a full-stack tool chain for ground truth data generation and evaluation

Ø Evaluate each sensor in the multi-sensor fusion system

The RS-Reference can not only evaluate the fused output of an intelligent driving perception system, but also provides dedicated evaluation tool modules based on the characteristics of different types of sensors such as LiDAR, millimeter-wave radar, and camera. Moreover, customized tool modules can be developed according to customers' requirements to carry out in-depth analysis of the performance of the perception system.

Next, in version 2.2 of the RS-Reference, we will also release evaluation tool modules for road signs, lane line categories, scene semantic recognition, and other items.

Ø Huge potential in extended applications of RS-Reference

Support for planning and control algorithm development: With the help of the RS-Reference, developers of intelligent driving systems can build a massive database of driving scenes to support the development of the core "planning and control" algorithms in parallel with the development of the perception system. To a large extent, this overcomes the constraints of the sequential "perception→planning→control" development order and can greatly accelerate project development.

Empowering simulation: The massive ground truth data of real scenes generated by the RS-Reference can be used to construct simulation scenes. This gives the simulation scenes a reliable source of data and also greatly improves the authenticity of that data.

Evaluation of roadside perception systems: Based on its powerful ground truth data generation capability and evaluation tool chain, the RS-Reference can also evaluate the perception performance of the roadside sensing systems in Vehicle-to-Infrastructure (V2I) projects, advancing the development and deployment of smart transportation projects.

Ø High recognition from global top OEMs and Tier1s

Since its launch, the RS-Reference has been used by many top OEMs and Tier1s globally to solve sensing problems of many smart driving projects, and has won high recognition and a large number of orders from customers.

△Many OEMs and Tier1s began to use the RS-Reference at an early stage of developing their intelligent driving perception systems, and have gradually formed large-scale test fleets

About RoboSense

RoboSense (Suteng Innovation Technology Co., Ltd.) is a world-leading provider of Smart LiDAR Sensor Systems. With a complete portfolio of LiDAR sensors, AI perception and IC chipsets, RoboSense transforms conventional 3D LiDAR sensors with comprehensive data analysis and interpretation systems. Its mission is to innovate outstanding hardware and AI capabilities to create smart solutions that enable robots, including autonomous vehicles, to have perception capabilities superior to humans.